Cast Spells on Product Discovery

Prototype Testing Techniques: Accelerate Your Product Discovery with Validated Learning

Effective prototype testing is critical to early product discovery. This guide covers how product teams can test prototypes ranging from low-fidelity sketches to high-fidelity interactive simulations, validating their assumptions before committing development resources.

In large product development efforts, features built without validation are expensive. Prototyping and testing bridge the gap between user needs and proposed solutions, allowing teams to validate assumptions, refine concepts, and build confidence before committing to full-scale development.

According to the Product Development and Management Association, products whose prototypes undergo rigorous testing are 37% more likely to succeed in the market and require 43% fewer post-launch revisions. Despite these figures, many product teams cut corners on testing and rush from idea to development.

The Strategic Value of Prototype Testing in Product Discovery

This section examines the return on investment of rapid prototype testing. Testing a concept at the point when changes are easiest to make, and capturing objective data at that point, supports critical product decisions.

Strategic prototype testing aims well beyond checking whether users like a design: it addresses the broader questions that shape the entire course of product development.

Risk Reduction Through Validation

Every product initiative carries uncertainty about users, technology, or cost. Strategic prototype testing reduces that uncertainty by validating the most basic yet crucial assumptions before major investments are made.

Experienced product managers describe prototype testing as the step that turns opinions and assumptions into evidence and insight. This evidence-based reasoning reduces the risk of building features the target audience would find unnecessary or irrelevant.

Accelerating Learning and Generating Insights

Well-planned prototype tests often yield insights that exceed stakeholders' expectations. While watching users interact with prototypes, teams often notice:

Mismatches between users' mental models and the way features are structured

Opportunities to integrate the product into existing workflows

Terminology that confuses users and needs to change

Differences in how users perceive the value of features, which inform prioritization decisions

These discoveries emerge when users engage with tangible artifacts rather than abstract discussion. As UX pioneer Jakob Nielsen says, “Users don’t know what they want, but they recognize it when they see it.”

Supporting Decisions and Obtaining Consensus

Prototype testing gives decisions a solid evidential anchor and builds agreement among stakeholders. When departments disagree about a feature’s design direction, test findings provide neutral evidence for resolving the dispute.

Some companies, such as Intuit, have built entire development philosophies around this practice. Under its “Design for Delight” approach, prototype testing is mandatory for major features, and teams align around the needs those tests validate.

Matching Prototype Fidelity to Discovery Questions

Effective product discovery depends on choosing the right prototype fidelity. Different learning goals call for different prototypes, ranging from simple paper sketches to fully interactive digital experiences.

One of the most common prototype testing mistakes is building a prototype far more elaborate than the questions require, wasting time and resources. The key is to match the prototype’s fidelity to the test objectives.

Low-Fidelity Prototypes in Product Discovery

Low-fidelity prototypes, such as paper sketches, wireframes, and simple clickable mockups, are particularly useful for validating information architecture and workflows. They work best early in product development, especially when several approaches are still under consideration.

Low-fidelity prototypes can be used to:

Verify core value propositions

Validate general workflow models

Test several solution pathways

Verify information architecture at a structural level

Validate terminology and mental models

The most important benefits of low-fidelity testing:

Fast to create (hours rather than days)

Easy to revise after feedback

Keeps attention on the concept rather than on details

Less premature attachment to specific solutions

Participants focus on function instead of offering feedback on visual design

According to Google Ventures’ design sprint guidance, even rough prototypes can test approximately 80% of critical assumptions about user behavior and feature utility, and increasing fidelity beyond what the questions require rarely yields additional insight.

Medium-Fidelity Prototypes for Concept Validation

Medium-fidelity prototypes add greater visual accuracy, realistic content, and interactivity. Tools such as Figma, Sketch, and Adobe XD make it easy to build prototypes that feel like real products without consuming development resources.

When to use medium-fidelity prototypes:

Validating specific interaction patterns

Evaluating information architecture and visual layout

Checking content sufficiency and clarity

Evaluating navigation concepts in fine detail

Confirming specific user flows

Shopify, an e-commerce leader, uses medium-fidelity prototypes to test merchant-facing features before development. Its product teams have found medium fidelity to be the ‘sweet spot’ between effort and insight for many test objectives.

Advanced Testing with High-Fidelity Prototypes

High-fidelity prototypes are the most resource-intensive to build and most closely resemble the final product in look and function. In return, the user behavior they reveal is the most likely to predict how people will interact with the shipped interface.

When to use high-fidelity prototypes:

Validating detailed interactions

Evaluating interaction performance

Checking visual appeal and brand alignment

Validating accessibility standards

Final validation before development

Unlike many companies, Airbnb reserves high-fidelity prototypes for critical user flows. The company believes that small interaction details strongly shape the user experience, so it invests in high fidelity selectively, focusing resources on the most crucial or novel interactions.

Important Prototype Testing Methods

Mastering the following established methods, from traditional moderated usability testing to modern remote approaches, will make your product discovery efforts more productive. Each provides rigorous validation of product ideas.

Moderated User Testing for Comprehensive Insights

Moderated usability testing, in which a facilitator guides participants as they interact with a prototype, remains the best option for gathering rich, contextual insights. The facilitator can probe participants’ reasoning, ask clarifying questions, and follow up on unexpected reactions in real time.

Best practices for moderated usability testing:

Write a clear but flexible test script.

Start with context-setting questions about participants’ relevant past experiences.

Include critical tasks that test key assumptions.

Use a think-aloud protocol to capture the reasoning behind actions.

Ask open-ended follow-up questions about what you observed.

Prioritize what participants do over what they say.

Leave time for general comments and impressions.

Intuit, a global financial software company, uses a method it calls “Follow Me Home,” in which product managers watch users work with prototypes in the users’ own workplaces. This contextual approach yields insights that lab-based testing cannot.

Unmoderated Remote User Testing for Enhanced Scope and Diversity

Platforms such as UserTesting, Maze, and UsabilityHub offer unmoderated testing services that let teams test prototypes efficiently with hundreds of users. Although this method lacks the nuanced insight of moderated testing, it offers statistical confidence and reveals patterns across diverse segments.

Unmoderated testing is most effective when you:

Define tasks that are easy to understand and have clearly defined success criteria

Design tests that can be completed in 15-20 minutes

Include short follow-up questions after each task

Use quantitative measures such as task completion rate and time on task

Include demographically diverse participants

Recruit at least 30-50 participants for statistical reliability

Combine qualitative feedback with quantitative measurement

Slack’s product team is known to use unmoderated testing to build confidence in features. As one product researcher stated, “For us, when unmoderated testing shows us over 80 percent success rates with diverse users, we know we’re doing something right.”
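Whether a success rate like the 80 percent in that quote is trustworthy depends on how many people were tested. A minimal sketch, assuming pass/fail task outcomes, puts a 95% confidence interval around a completion rate using the adjusted Wald (Agresti-Coull) interval, a common choice for small usability samples. The function name and example counts are illustrative, not data from Slack or any other team.

```python
import math


def completion_rate_ci(successes: int, n: int, z: float = 1.96) -> tuple[float, float, float]:
    """Return (observed rate, lower bound, upper bound) for a task completion rate.

    Adjusted Wald (Agresti-Coull): add z^2/2 successes and z^2/2 failures,
    then apply the normal approximation. z = 1.96 gives roughly 95% coverage.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    observed = successes / n
    n_adj = n + z**2
    p_adj = (successes + z**2 / 2) / n_adj
    margin = z * math.sqrt(p_adj * (1 - p_adj) / n_adj)
    # Clamp to the valid probability range.
    return observed, max(0.0, p_adj - margin), min(1.0, p_adj + margin)


# The same 80% observed rate carries very different certainty at different sample sizes.
for successes, n in [(8, 10), (40, 50)]:
    rate, low, high = completion_rate_ci(successes, n)
    print(f"{successes}/{n}: {rate:.0%} (95% CI {low:.0%} to {high:.0%})")
```

Running it shows the interval for 8 of 10 participants is roughly twice as wide as for 40 of 50, which is one way to see why larger unmoderated samples are recommended.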

Comparative Testing for Decision Support

When teams have several candidate solutions to one problem, comparative testing provides direct evidence for choosing between them. Participants are shown different versions of a prototype so the team can uncover both preferences and performance differences.

To get the most value from comparative testing:

Test functionally equivalent prototypes

Randomize the presentation order

Use the same tasks across all versions

Collect both objective performance measures and preference indicators

Do not reveal in advance that the versions are competing alternatives.

Ask for comparative feedback only after participants have tried every version.

Weight predefined objective measures more heavily than subjective ones.
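Randomizing presentation order is often done by counterbalancing, so every version appears in every position equally often and learning effects cancel out. The sketch below assumes a small number of variants and cycles participants through every possible order; the variant names are placeholders.

```python
from itertools import permutations


def presentation_orders(variants: list[str], participants: int) -> list[tuple[str, ...]]:
    """Assign each participant an order by cycling through every permutation.

    When `participants` is a multiple of len(variants)!, each order is used
    equally often, so each variant appears in each position equally often.
    """
    all_orders = list(permutations(variants))
    return [all_orders[i % len(all_orders)] for i in range(participants)]


orders = presentation_orders(["A", "B", "C"], participants=12)
for participant_id, order in enumerate(orders, start=1):
    print(participant_id, " -> ".join(order))
```

Full permutation grows factorially (five variants already give 120 orders), so with more than three or four variants a Latin square, where each variant appears once in each position, is the more practical design. Shuffling which participant receives which slot adds randomization on top of the counterbalancing.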

The team behind QuickBooks financial software relies heavily on comparative testing for critical user workflows. Its product managers report that comparative data effectively resolves stakeholder disagreements about design direction.

Wizard-of-Oz Testing for Complex Functionality

For features with complex backend logic, Wizard-of-Oz testing lets teams observe real user interactions without designing and building the entire system. The user-facing interface appears automated, but a human “wizard” behind the curtain produces the system’s responses.

Best practices for Wizard-of-Oz testing:

Create response protocols so simulations stay consistent.

Train multiple ‘wizards’ to mitigate bias.

Define realistic response times.

Anticipate common edge cases and error scenarios.

Maintain the illusion of automation throughout the session.

Document every assumption made during the simulation.

Record the technical constraints the tests expose.

X.ai, a pioneering AI assistant company, took this approach when developing its scheduling assistant. It could test complex dialogues and validate intricate conversational interactions before committing to AI development.
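A response protocol can be as simple as a table of scripted replies the wizard chooses from, plus a log that records each exchange and flags anything the script did not cover. The sketch below is hypothetical: the intents, replies, and `WizardSession` class are invented for illustration and do not describe X.ai’s actual tooling.

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical response protocol: scripted replies keyed by the intent the
# wizard judges the participant to have expressed.
RESPONSE_PROTOCOL = {
    "schedule_meeting": "Sure. What day works best for you?",
    "propose_time": "Got it. I've sent an invite for that time.",
    "cancel_meeting": "Okay, I've cancelled the meeting and notified attendees.",
}
FALLBACK = "Sorry, I didn't quite catch that. Could you rephrase?"


@dataclass
class WizardSession:
    target_delay_s: float = 2.0  # agreed response time so replies feel automated
    log: list[dict] = field(default_factory=list)

    def respond(self, participant_text: str, intent: Optional[str]) -> str:
        """Return the scripted reply for the chosen intent, or the fallback.

        Inputs with no scripted intent are logged as edge cases for review.
        """
        reply = RESPONSE_PROTOCOL.get(intent, FALLBACK)
        self.log.append({
            "input": participant_text,
            "intent": intent,
            "reply": reply,
            "edge_case": intent not in RESPONSE_PROTOCOL,
            "target_delay_s": self.target_delay_s,
        })
        return reply

    def edge_cases(self) -> list[str]:
        return [entry["input"] for entry in self.log if entry["edge_case"]]


session = WizardSession(target_delay_s=1.5)
print(session.respond("Can we meet next Tuesday?", "schedule_meeting"))
print(session.respond("Move it to whenever Sam is free", None))
print(session.edge_cases())  # ['Move it to whenever Sam is free']
```

Keeping the replies in one shared table is what lets several trained wizards respond consistently, and the edge-case log doubles as the record of assumptions and gaps the list above recommends documenting.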

First Impression Testing with Five Seconds of Focus

In a five-second test, participants view a prototype for five seconds and are then asked which elements they remember and what those elements mean to them. The method assesses comprehension, value communication, and first impressions.

Best practices for five-second testing:

Focus on a single screen or view.

After exposure, ask which elements participants recall.

Check whether participants understood the screen’s purpose and function.

Ask what action or next step they would expect to take.

Test different versions to compare effectiveness.

Include questions about emotional response.

Test with at least 15–20 participants per version.

Five-second tests have also proven useful for evaluating how well advertisements communicate a value proposition: users who do not quickly grasp the point of a product shown in an ad tend to bounce at a higher rate.
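Comparing versions in a five-second test usually comes down to the share of participants who recalled the key element. A minimal sketch, assuming each participant’s free-text answer has already been coded into a set of recalled elements; the codes and the four-person samples are toy data, far below the 15-20 per version recommended above.

```python
# Hypothetical coded responses: the set of elements each participant recalled.
responses = {
    "version_a": [{"logo", "value_prop"}, {"logo"}, {"cta"}, {"value_prop", "cta"}],
    "version_b": [{"value_prop"}, {"value_prop", "cta"}, {"logo", "value_prop"}, {"cta"}],
}


def recall_rate(coded: list[set[str]], element: str) -> float:
    """Share of participants whose recall included `element`."""
    return sum(element in answer for answer in coded) / len(coded)


for version, coded in responses.items():
    print(version, f"value_prop recalled by {recall_rate(coded, 'value_prop'):.0%}")
```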

How to Set Up Effective Prototype Tests

Follow these steps to get the most out of prototype testing and gather feedback that moves product discovery forward.

Set Focused Learning Goals

Every test should be built around specific learning goals. Vague goals lead to vague, superficial insights that cannot guide decisions, because the test’s focus is misaligned with what the team actually needs to learn.

Start by defining the specific questions the test must answer, for example:

“Can users independently complete the checkout process?”

"Do users filter search results through our new interface correctly?"

"Which of our three new navigation approaches do new users find most intuitive?"

These objectives should come from the key assumptions identified earlier in the product discovery process. In Continuous Discovery Habits, Teresa Torres argues that the most impactful test objectives target assumptions that are both high-impact and highly uncertain.

Representative Participant Recruiting

Your test insights are only as good as your participants. Recruiting the wrong testers skews results and can seriously misdirect product development.

For most products, aim to recruit:

Current or prospective customers who fit within your target segments.

Participants with different degrees of domain knowledge.

People from a range of demographics.

People with varying levels of comfort with technology.

Novice and advanced users, if applicable.

Research from the Nielsen Norman Group indicates that testing with just five users from a single segment uncovers around 85% of usability problems. When testing multiple distinct user segments, however, you need about five participants from each segment.
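The 85% figure comes from a simple problem-discovery model: if any one user reveals a given problem with probability p, then n users are expected to reveal a share 1 - (1 - p)^n of the problems. The sketch assumes the often-cited average p ≈ 0.31 from Nielsen and Landauer’s work; the real value varies by product and segment, which is exactly why each distinct segment needs its own participants.

```python
def share_of_problems_found(n_users: int, p_detect: float = 0.31) -> float:
    """Expected share of usability problems found by n_users.

    Assumes each user independently reveals any given problem with
    probability p_detect: found(n) = 1 - (1 - p)^n.
    """
    return 1 - (1 - p_detect) ** n_users


for n in (1, 3, 5, 10, 15):
    print(n, f"{share_of_problems_found(n):.0%}")
```

With p = 0.31, five users find about 84% of problems, and each additional user adds progressively less. If p is lower for a given product, say 0.15, five users find only about 56%.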

Creating Effective Test Scenarios

Well-crafted scenarios help participants understand the context of your prototype and engage with it realistically, which produces more authentic behavior and feedback.

The most effective test scenarios:

Align with a real user’s motivation and goals.

Provide context without being overly prescriptive.

Avoid technical language unless participants are experts.

Include realistic constraints and considerations.

Do not hint at the “correct” answer or solution.

For instance, rather than saying “Click the filter button and select ‘price low to high,’” say “You’re shopping for headphones and want to see the most affordable ones first. Show me how you would do that.”
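One cheap safeguard is to check task wording against the prototype’s own UI labels before a session, since reusing interface language is a common way scenarios leak the answer. This is a sketch under a simplifying assumption: it only catches verbatim label matches, not subtler hints, and the label list is hypothetical.

```python
import re


def leading_terms(task_text: str, ui_labels: list[str]) -> list[str]:
    """Return UI labels that appear verbatim (case-insensitive, whole words)
    in a task prompt. Any hit suggests the prompt may lead participants."""
    hits = []
    for label in ui_labels:
        pattern = r"\b" + re.escape(label.lower()) + r"\b"
        if re.search(pattern, task_text.lower()):
            hits.append(label)
    return hits


ui_labels = ["filter", "price low to high", "sort"]
leading = "Click the filter button and select 'price low to high'."
realistic = ("You're shopping for headphones and want to see the most "
             "affordable ones first. Show me how you would do that.")
print(leading_terms(leading, ui_labels))    # ['filter', 'price low to high']
print(leading_terms(realistic, ui_labels))  # []
```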

Establishing Measurement Frameworks

Qualitative observations yield rich insights, but quantitative measures let you track progress objectively against set goals. Define your measurement framework before testing so that data is collected consistently.

Common prototype testing metrics include:

Task completion rate (percentage of users who complete a given task within the allotted time)

Time on task (how long a task takes to complete)

Error rate (mistakes made while performing tasks)

Assistance requests (how often, and where, participants ask for help)

Subjective satisfaction scores (satisfaction ratings collected immediately after a task)

System Usability Scale (SUS) scores for general usability

Net Promoter Score (NPS) for the overall experience
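SUS and NPS both have fixed scoring rules that are easy to get wrong by hand. The sketch below follows the standard definitions: SUS uses ten 1-5 responses where odd-numbered items contribute (response - 1) and even-numbered items contribute (5 - response), scaled by 2.5 to a 0-100 score; NPS is the percentage of promoters (9-10) minus the percentage of detractors (0-6). The sample responses are made up.

```python
def sus_score(responses: list[int]) -> float:
    """System Usability Scale score (0-100) from ten responses on a 1-5 scale.

    Odd-numbered items (1, 3, 5, ...) are positively worded: contribute r - 1.
    Even-numbered items are negatively worded: contribute 5 - r.
    The 0-40 raw sum is multiplied by 2.5.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("SUS needs ten responses between 1 and 5")
    # enumerate index 0 is item 1 (odd), index 1 is item 2 (even), and so on.
    total = sum((r - 1) if i % 2 == 0 else (5 - r) for i, r in enumerate(responses))
    return total * 2.5


def nps(ratings: list[int]) -> float:
    """Net Promoter Score: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("need at least one rating")
    promoters = sum(r >= 9 for r in ratings)
    detractors = sum(r <= 6 for r in ratings)
    return 100 * (promoters - detractors) / len(ratings)


print(sus_score([5, 1, 5, 1, 5, 1, 5, 1, 5, 1]))  # 100.0 (best possible)
print(sus_score([4, 2, 4, 2, 3, 2, 4, 1, 4, 2]))  # 75.0
print(nps([10, 9, 9, 8, 7, 6, 3, 10]))            # 25.0
```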

To help teams track prototype improvements across iterations, Adobe developed a “Clarity Index” that combines these metrics into a single score, enabling data-driven decisions about whether a prototype is ready for development.

Analyzing and Acting on Prototype Test Results

Use the methods below to turn raw prototype test data into product changes. In any product discovery workflow, interpreting test results effectively should be a top priority.

Identifying Patterns in Multiple Test Sessions

Individual sessions offer useful stories and glimpses of the broader user experience, but the real value lies in the patterns that emerge across many sessions. Multiple sessions let analysts distinguish individual quirks from genuine problems that need resolution.

Effective pattern analysis includes:

Classifying observations (confusion, error, delight, and so on)

Mapping issues to the interface components or workflows involved

Counting how often similar observations occur

Separating critical issues from minor ones

Relating patterns to user segments or demographics

Microsoft product teams are trained in “affinity diagramming” to speed up pattern detection: after testing, team members write their observations on sticky notes and group them into themes or areas that need focused attention.
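Once observations are coded, the counting step of pattern analysis can be automated. The sketch assumes each note has been tagged with a participant ID, a theme, and an interface component (all sample values are hypothetical), and counts distinct participants per theme and component, so that one talkative participant repeating the same point does not inflate a pattern.

```python
from collections import defaultdict

# Hypothetical coded observations: (participant, theme, component).
observations = [
    ("P1", "confusion", "checkout"),
    ("P1", "confusion", "checkout"),  # same participant, repeated note
    ("P2", "confusion", "checkout"),
    ("P2", "delight", "search"),
    ("P3", "error", "checkout"),
    ("P4", "confusion", "checkout"),
    ("P4", "error", "search"),
]


def participants_per_group(obs: list[tuple[str, str, str]]) -> dict[tuple[str, str], set[str]]:
    """Map (theme, component) to the set of distinct participants who showed it."""
    groups: dict[tuple[str, str], set[str]] = defaultdict(set)
    for participant, theme, component in obs:
        groups[(theme, component)].add(participant)
    return groups


groups = participants_per_group(observations)
for (theme, component), people in sorted(groups.items(), key=lambda kv: -len(kv[1])):
    print(f"{theme:<10} {component:<10} {len(people)} participant(s)")
```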

Signal Versus Noise

Not every observation deserves equal weight. The main challenge is distinguishing critical problems that block users from issues that are merely inconvenient.

Use these criteria to evaluate an observation’s impact:

Effect on users’ ability to achieve their primary goals

How many participants encountered it

Severity (annoying but completable versus impossible to complete)

Alignment with business goals

Direct impact on the success measures defined during planning

Estimated effort required to resolve the issue

Consistency with existing frameworks and users’ established mental models

Jake Knapp, author of the Google Ventures Design Sprint, suggests what he calls the “two-thirds rule”: when two-thirds of participants run into the same problem, it is very likely a real issue that needs fixing.
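Applied mechanically, the rule is a threshold on the share of affected participants. This sketch uses exact fractions so that borderline cases such as 4 of 6 are not misjudged by floating-point rounding; the issue names and counts are hypothetical.

```python
from fractions import Fraction


def passes_two_thirds_rule(affected: int, total: int) -> bool:
    """True when at least two-thirds of participants hit the same problem."""
    if total <= 0:
        raise ValueError("total must be positive")
    return Fraction(affected, total) >= Fraction(2, 3)


issues = {"can't find filter": 4, "unclear price label": 3, "slow animation": 1}
n_participants = 6
must_fix = [name for name, count in issues.items()
            if passes_two_thirds_rule(count, n_participants)]
print(must_fix)  # ["can't find filter"]
```

Note that with the common five-participant round, the threshold means four people, because 3 of 5 (60%) falls just short of two-thirds.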

Prioritizing Problems and Opportunities

Once patterns are identified, prioritize issues by weighing their impact against the effort needed to fix them. This ordering ensures that the most important issues are addressed first as the prototype is refined.

Useful prioritization methods include:

Impact versus effort matrices

User journey criticality (issues in primary flows get higher priority)

Alignment with business value

Technical dependencies (problems that affect multiple areas)

Alignment with experience principles

Spotify’s product teams use a “Severity Rating” system that scores issues from 1 to 4 based on frequency and user impact, giving them a clear path for iteration.
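An impact versus effort matrix and a frequency-and-impact severity score can be combined in a few lines. The sketch below is hypothetical throughout: the 1-3 impact and effort scales, the 50% “widespread” cutoff, and the severity mapping are illustrative choices, not Spotify’s actual rubric, which the source does not describe.

```python
from dataclasses import dataclass


@dataclass
class Issue:
    name: str
    frequency: float  # share of participants affected, 0.0 to 1.0
    impact: int       # 1 = cosmetic, 2 = slows the task, 3 = blocks the task
    effort: int       # 1 = trivial fix, 2 = moderate, 3 = major rework


def quadrant(issue: Issue) -> str:
    """Place an issue on a 2x2 impact-versus-effort matrix."""
    high_impact = issue.impact >= 2
    low_effort = issue.effort <= 1
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def severity(issue: Issue) -> int:
    """Hypothetical 1-4 severity (4 = most severe) from frequency and impact."""
    widespread = issue.frequency >= 0.5
    if issue.impact == 3:
        return 4 if widespread else 3
    if issue.impact == 2:
        return 3 if widespread else 2
    return 1


backlog = [
    Issue("Checkout button hidden on mobile", frequency=0.7, impact=3, effort=1),
    Issue("Ambiguous 'Sync' label", frequency=0.4, impact=2, effort=1),
    Issue("Onboarding flow too long", frequency=0.6, impact=2, effort=3),
    Issue("Icon misaligned", frequency=0.2, impact=1, effort=1),
]
# Most severe first; among equal severity, cheaper fixes first.
for issue in sorted(backlog, key=lambda it: (-severity(it), it.effort)):
    print(severity(issue), quadrant(issue), issue.name)
```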

Iterative Improvement Processes

Prototype testing is rarely a one-time event. The best teams run rapid iteration cycles, using the results of each testing round to refine the prototype for the next.

Best practices for iterative improvement include:

Establishing time-boxed review sessions for each round of testing

Focusing each round on testing specific hypotheses

Keeping a changelog that records every prototype change for each build

Holding some elements constant across iterations to control variables

Recruiting fresh participants for each round to eliminate learning effects

Comparing results across iterations of the same test to confirm that changes are genuine improvements

Square runs “Testing Tuesdays”: product teams test prototypes on Tuesday, analyze the results on Wednesday, make adjustments from Thursday through Monday, and test again the following Tuesday. This cadence supports continuous learning and refinement throughout product discovery.

Common Prototype Testing Mistakes

Avoid these common mistakes, which undermine the value of prototype testing in product discovery. Understanding them will help you test more effectively.

Leading Users Toward Predetermined Results

One of the most widespread and damaging mistakes is leading users down predetermined paths during testing. Facilitator bias often creeps in through subtle cues.

Common forms of problematic leading include:

Making suggestions such as “Have you tried clicking the button in the corner?”

Showing approval when users take the expected path

Offering help before users have genuinely struggled with a task

Phrasing task instructions with the interface’s own button labels and terminology

Reacting emotionally when users struggle or succeed

A study by the University of Michigan’s User Experience Research Laboratory found that moderator bias can artificially inflate task completion rates by as much as 30 percent, producing dangerously misleading results.

Testing After Significant Portions of the System Have Been Developed

The value of prototype testing drops sharply as development progresses. Teams that start testing only after substantial coding face a difficult choice: ignore critical usability problems or undertake expensive rework.

Problems associated with testing too late include:

Defensiveness that blocks meaningful change

Decisions distorted by the sunk cost fallacy

Compromise solutions that address only the symptoms of problems

Schedule pressure to accept poor experiences

Few remaining levers for improvement, which sharply limits the changes still possible

According to IBM’s design thinking analysis, fixing a usability issue after development begins costs up to 100 times as much as fixing it during prototyping. IBM’s findings also indicate that every dollar invested in early usability testing returns between $10 and $100 in reduced development and ongoing support costs.

Concentrating on Opinions Instead of Actions

Asking users whether they like a design often produces misleading answers. What users say and what they do frequently diverge, and stated preferences do not reliably predict actual behavior.

Observed behavior is far more valuable than stated opinion. Watch for signals such as:

Where on a screen users look, and for how long

Which actions users take, which they ignore, and when they do nothing at all

Hesitation, where a workflow stalls for a noticeable period

Terms users find conflicting or confusing for particular activities

Tasks that produce errors or require help to complete

Signs of distrust during particular interactions

One team found that older participants relied most on emotional engagement when stating preferences, leaving a gap for younger participants, and so chose to prioritize behavioral feedback over claimed preferences. Its director of research observed that users can be sensitive about explaining their choices yet firm in their conclusions, which is why watching behavior sets more reliable expectations than collecting judgments.

Accepting Polite Feedback at Face Value

Participants often claim a prototype works well simply to be polite. The desire to please the researcher masks real problems, even though honest critical feedback is exactly what the test needs.

Warning signs that politeness is concealing problems include:

Generic praise with no clear justification

Long pauses followed by claims of completing the task

Self-deprecation over challenges encountered (“I’m just not very technical”)

Downplaying obvious confusion (“I’m sure I’d figure it out eventually”)

Nonverbal cues that contradict what the participant says

To counter this, experienced Google researchers explicitly invite critical feedback, telling participants, “We’re not testing you. We’re testing the prototype,” and “Finding problems now allows us to make better products for everyone.”

Mismatching Test Focus and Fidelity Level

Many teams waste resources because their testing priorities don’t match the prototype’s fidelity: gathering feedback on visual details from wireframes, for example, or validating basic concepts with polished high-fidelity prototypes.

Common fidelity mismatches include:

Gathering UI color and typography feedback on wireframes

Validating a basic concept with a polished prototype

Building interaction simulations when the goal is to assess content clarity

Assessing content clarity with lorem ipsum placeholders

Measuring emotional response to interfaces that have not yet been visually designed

The UX consultancy Nielsen Norman Group recommends “fidelity alignment,” matching test questions to the prototype’s level of completion, to avoid disproportionate costs and unnecessary complexity. Its work shows that misalignment increases spending and confusion without producing proportionate insight.

Incorporating Prototype Testing into Your Product Discovery Process

The following strategies help make prototype testing a seamless part of building a product. Integrating testing systematically into discovery provides a steady stream of validated insights.

Establishing Testing Cadences

Regular testing rhythms make validation routine rather than exceptional. With a predictable cadence, teams don’t need to plan each round of prototype testing from scratch.

Successful testing cadences include:

Weekly “Testing Thursdays” with recruited participants

Biweekly deep-dive sessions for important features

Monthly benchmark testing against set goals

Quarterly holistic assessments of the full user journey

Ad hoc “guerrilla testing” for fast, low-detail feedback

Atlassian, the company behind Confluence and Jira, runs a “persistent testing lab”: an always-available prototype testing setup with minimal scheduling lead time, so teams can validate frequently.

Creating Collaborative Testing Environments

When prototype testing is a team effort rather than one researcher’s job, insights spread actively through the company. Create participatory testing environments to draw in people from different departments.

Collaborative testing strategies may include:

Live session observation: team members watch sessions from observation rooms.

Rotating facilitation: each team member gets a chance to facilitate.

Collaborative debriefs: analysis and evaluation sessions held right after tests.

Shared testing repositories: recordings and highlights of past tests, accessible to everyone in the organization.

“Testing tour guides”: people who walk new observers through a session step by step and keep them in the loop.

Payment processor Stripe requires product managers, designers, and engineers to observe at least two user testing sessions every quarter. This policy keeps technical teams directly exposed to how users behave and where they struggle.

Connecting Testing Insights to Development Decisions

Testing is most valuable when it directly shapes product decisions. Create deliberate links between testing insights and development priorities.

Ways to create these links include:

Adding “Usability Evidence” fields to user stories

Linking development tickets to specific test observations

Requiring features to be prototyped and validated before development begins

Using test results as acceptance criteria for further development

Including testing metrics in the criteria for product success

Enterprise software company Salesforce maintains a “UX Evidence” policy requiring every development story to cite the specific user research or testing that justifies it. This keeps development priorities anchored to validated user needs.
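A policy like this can be enforced with a simple check over the backlog. The sketch is hypothetical: the `UserStory` fields, ticket keys, and observation IDs are invented for illustration, and a real implementation would live in whatever issue tracker and research repository a team uses. It flags stories that cite no evidence or cite observation IDs that do not exist.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    key: str
    title: str
    usability_evidence: list[str] = field(default_factory=list)  # test observation IDs


def stories_missing_evidence(stories: list[UserStory], known_observations: set[str]) -> list[str]:
    """Return keys of stories with no evidence, or with evidence IDs
    that are not found in the research repository."""
    flagged = []
    for story in stories:
        if not story.usability_evidence or any(
            obs not in known_observations for obs in story.usability_evidence
        ):
            flagged.append(story.key)
    return flagged


repository = {"OBS-12", "OBS-31"}
backlog = [
    UserStory("PROD-101", "Add saved filters", ["OBS-12"]),
    UserStory("PROD-102", "Redesign settings page"),
    UserStory("PROD-103", "Bulk export", ["OBS-99"]),
]
print(stories_missing_evidence(backlog, repository))  # ['PROD-102', 'PROD-103']
```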

Broadening Testing Capabilities Across the Organization

As more teams master prototype testing, both productivity and the quality of validation rise significantly. Build testing skills at every level and across divisions.

Examples of skill-building approaches include:

Testing technique workshops for product and engineering teams

Facilitation practice sessions in which peers evaluate each other’s work

Training on analysis methods for interpreting results

Rapid prototyping skills for quickly turning concepts into testable prototypes

Certification programs for core testing competencies

Ride-share company Lyft has developed a “Research Champion” program that trains non-researchers in fundamental testing skills. This enables product teams to run basic validation independently and reserves research resources for more complex investigations.

Taking Your Prototype Testing to the Next Level

These advanced methods can turn prototype testing from a simple validation tool into a strategic advantage, yielding deeper insights and accelerating product development.

Emotional Response Testing

Organizations are increasingly going beyond functional usability to measure how products make users feel, which is essential for adoption and loyalty.

Advanced emotional testing techniques include:

Facial expression analysis during interaction

Biometric data collection (eye tracking, galvanic skin response, heart rate)

Micro-level sentiment analysis

Tracking emotional response across user flows

Implicit association testing for brand perception

Netflix uses emotional analytics in product testing, measuring not only whether users can operate interfaces but also how they feel. Its studies indicate that emotional response metrics correlate much more strongly with retention than traditional measures of functionality.

Longitudinal Testing Programs

Single-session testing captures only first reactions and misses perceptions and behaviors that evolve over time. Longitudinal programs track how users interact with prototypes over extended periods, revealing patterns that brief sessions cannot detect.

Effective approaches to longitudinal research include:

One- to two-week diary studies with unrestricted prototype access

Sequential testing at defined intervals

Introducing features progressively to measure adaptation

Comparing usage patterns over time

Tracking habit formation for critical actions

For major features, LinkedIn runs “30-day prototype immersions,” in which participants use prototype environments alongside the other products they already rely on. This reveals adoption patterns and how features integrate into real workflows, which short-term testing cannot.

Analytics Embedded in Interactive Prototypes

Modern prototyping tools can embed analytics directly in prototypes, creating a measurement feedback loop within the testing environment. These analytics provide objective usage data across large groups of participants.

Some key analytics include:

Heat mapping of interaction concentration.

Navigation path analysis.

Time-on-page measurements.

Feature discovery rates.

Abandonment point identification.

A/B variant comparison metrics.

Shopify embeds analytics in its high-fidelity prototypes, allowing it to test with hundreds of users at once and identify quantitative patterns that small-sample qualitative testing would miss.
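Navigation path analysis and abandonment point identification can be computed from a plain event log. A minimal sketch, assuming the instrumented prototype exports an ordered list of screens per session and that the task follows a known linear funnel; the screen names and sessions are hypothetical.

```python
from collections import Counter

# Hypothetical funnel and event log exported from an instrumented prototype.
FUNNEL = ["product_list", "product_detail", "cart", "checkout", "confirmation"]
sessions = {
    "s1": ["product_list", "product_detail", "cart", "checkout", "confirmation"],
    "s2": ["product_list", "product_detail"],
    "s3": ["product_list", "product_detail", "cart"],
    "s4": ["product_list"],
    "s5": ["product_list", "product_detail", "cart"],
}


def furthest_step(path: list[str]) -> int:
    """Index of the furthest funnel step reached in a session (-1 if none)."""
    reached = [FUNNEL.index(screen) for screen in path if screen in FUNNEL]
    return max(reached, default=-1)


def funnel_report(all_sessions: dict[str, list[str]]) -> list[tuple[str, int, int]]:
    """For each step: (step, sessions that reached it, sessions that stopped there).

    For the final step, "stopped there" means the session completed the task.
    """
    furthest = Counter(furthest_step(path) for path in all_sessions.values())
    report = []
    for i, step in enumerate(FUNNEL):
        reached = sum(count for idx, count in furthest.items() if idx >= i)
        report.append((step, reached, furthest[i]))
    return report


for step, reached, stopped in funnel_report(sessions):
    print(f"{step:<15} reached={reached} stopped_here={stopped}")
```

In this toy data the cart is the main abandonment point: three sessions reach it but only one continues to checkout.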

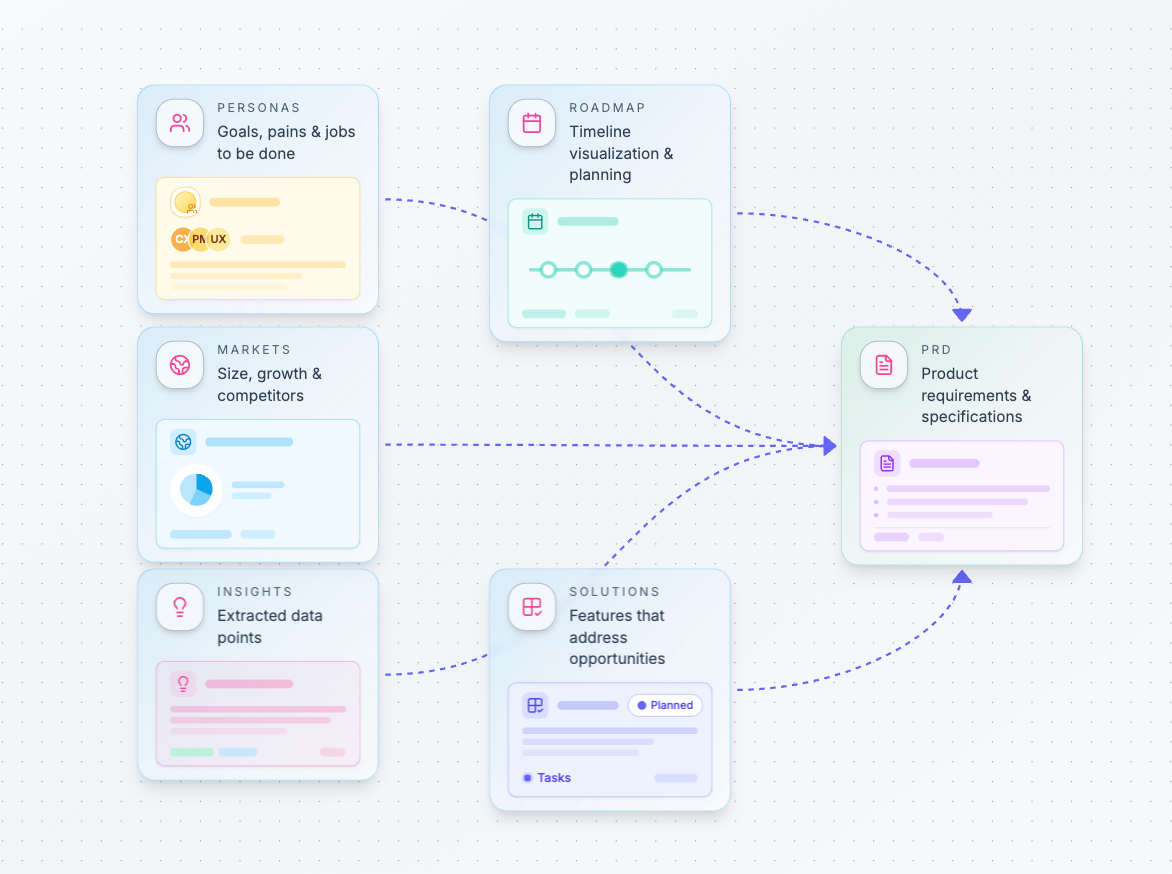

Many advanced product organizations now link prototype testing directly to their product discovery and design systems, streamlining the workflow from defining assumptions through testing, validation, and prioritization of development work. These systems keep validated learning flowing efficiently through the product development process.

Key Points to Remember About Prototype Testing in Product Discovery

Match prototype fidelity to your specific learning goals.

Combine several testing methods for thorough validation.

Focus on observing behavior rather than gathering opinions.

Establish consistent testing rhythms so validation becomes routine.

Explicitly connect test insights to decision-making during development.

Build testing capability across the entire organization.

Evaluate emotional response as well as functional usability.

Interested in transforming how you conduct prototype testing? Our AI-powered product management platform lets teams create, tailor, test, and iterate on prototypes in real time, automatically linking insights to development environments so that product decisions rest on real user needs validated through testing rather than on internal assumptions.

Contact us today for a demonstration of how we help leading product teams move beyond treating prototype testing as a scheduled milestone and embrace it as ongoing exploration.

ultimate product discovery
